Quantum computing’s “two-hour run” shocks the field — and resets expectations

From fragile lab demo to continuous operation

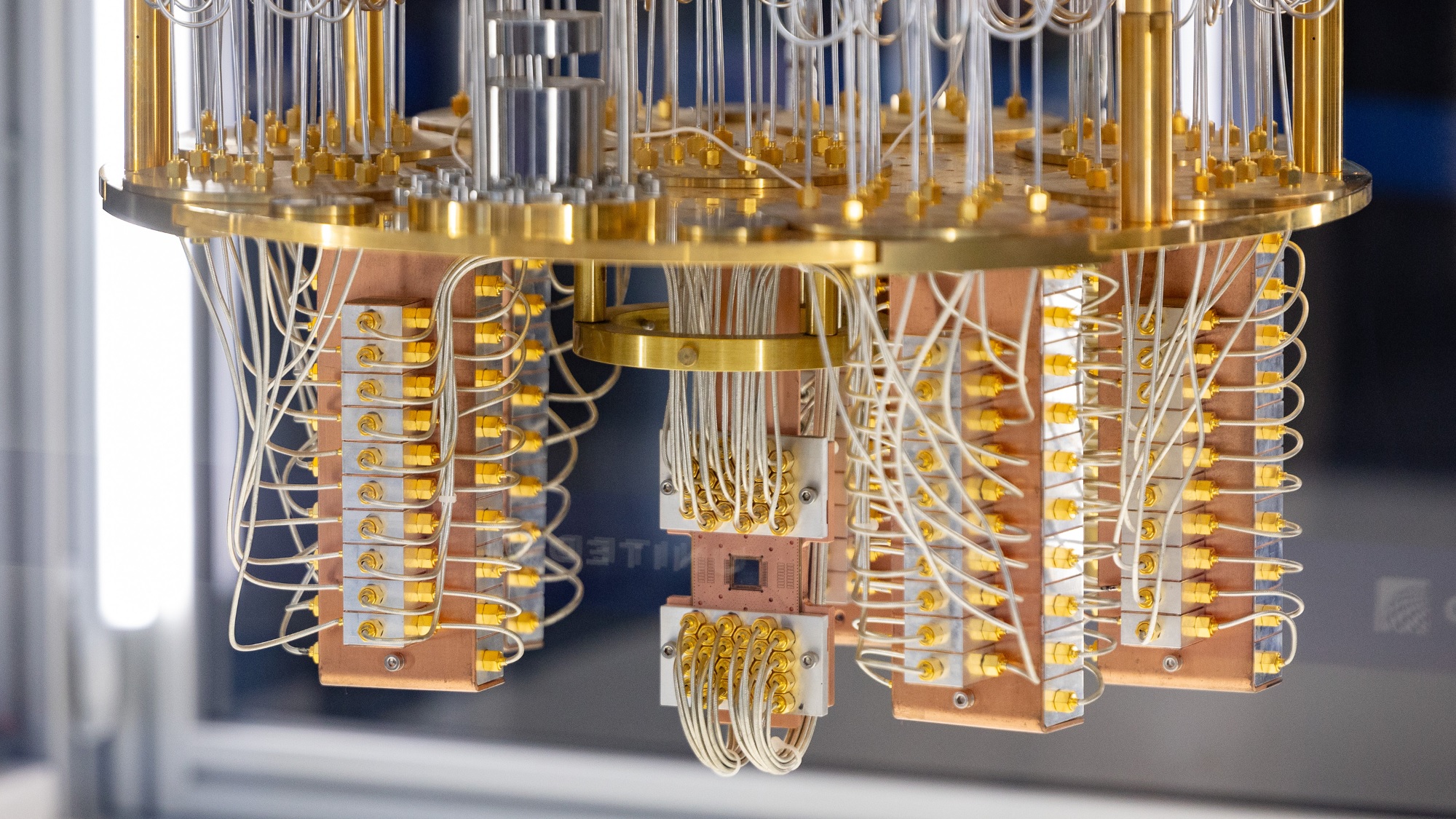

A Harvard–MIT research team says it has operated a quantum processor continuously for more than two hours without catastrophic loss of its quantum state, a milestone that many in the field thought was still years away. The group credits new error-suppression techniques and improved isolation that sharply slowed “atom loss,” the gradual escape of the atoms that serve as qubits from the traps holding them. In plain terms, instead of delivering only seconds of reliable performance before noise and lost qubits overwhelm the system, the machine stayed stable long enough to run complex routines end to end. Researchers are now openly talking about “practical quantum workflows,” not just physics experiments, and some predict near-continuous machines within three years.

Why does that matter? Traditional supercomputers work with regular bits, which are either 0 or 1. Quantum bits, or qubits, can exist in a superposition of both values at once. Algorithms that exploit superposition and interference can, in principle, solve certain problems far faster than classical machines, which makes them promising for chemistry, materials science, code-breaking, advanced logistics, and drug discovery. But qubits are famously delicate: the longer they must be held, the more likely noise is to destroy their quantum state. The two-hour benchmark doesn’t mean quantum computers are about to replace cloud data centers; they are still exotic, expensive, and extremely hard to scale. It does mean the sector is leaving the “look, we made it work for 10 seconds” era and entering “we can actually run a job” territory.

Now the pressure shifts to commercialization

This leap will intensify competition between U.S. labs, big cloud companies, and specialized quantum startups. If you can run a quantum job for hours instead of seconds, you can start selling time on that system to pharma companies, battery researchers, and defense contractors. That is exactly the market pitch behind today’s quantum race: not a machine on every desk, but a handful of ultra-secure, ultra-specialized systems accessed like supercomputing clusters.

It also creates new policy headaches. Governments are already funding “post-quantum” cryptography, meaning encryption schemes designed to withstand quantum attacks, because they assume future quantum systems could crack today’s widely used public-key encryption. If stable quantum hardware really is arriving faster, regulators will likely push to adopt those standards even sooner. This is why quantum is suddenly showing up not just at science conferences but in national security briefings and corporate boardrooms. The conversation is shifting from “if” to “when” and, increasingly, to “who controls it.”