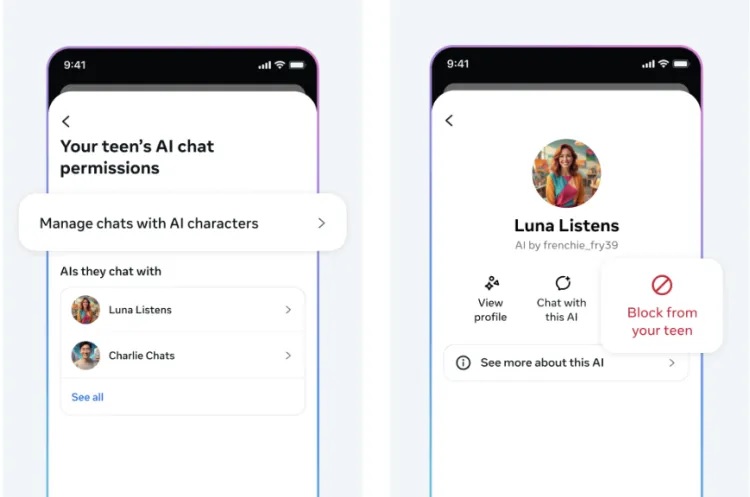

META ADDS PARENTAL CONTROLS FOR TEENS’ AI CHATS

New guardrails across Instagram, Messenger and Facebook

Meta is rolling out parental controls to limit or fully block teen conversations with AI chatbots. Supervisors can be alerted when a teen initiates an AI chat, set quiet hours, and restrict specific AI characters, while Meta’s core assistant remains age-gated. The features plug into Family Center dashboards and start in English in the U.S., U.K., Canada and Australia before a wider expansion. The move follows months of pressure over inappropriate AI exchanges and broader concerns about teen safety online.

What changes for families and platforms

For parents, the controls add a middle ground between “off” and “unrestricted,” alongside clearer disclosures so teens know they’re speaking to an AI. Meta says its safety systems have been tuned to deflect sensitive queries and reduce hallucinations, but enforcement will be key, particularly around alt accounts and settings that must carry across apps. Regulators will watch how usage data is stored and audited, and whether labels and limits measurably reduce harmful prompts. If well implemented, the tools could become a template for other social apps struggling to balance experimentation with adolescent safeguards.